Model Statistics Overview

The Model Statistics page explains how a trained machine learning model behaves, in terms that non-technical users can follow. After every model training session, statistics and graphs become available to help users interpret the model’s behavior and predictions. Currently, this functionality is available for Churn and Response Prediction models.

Explainability with SHAP Values

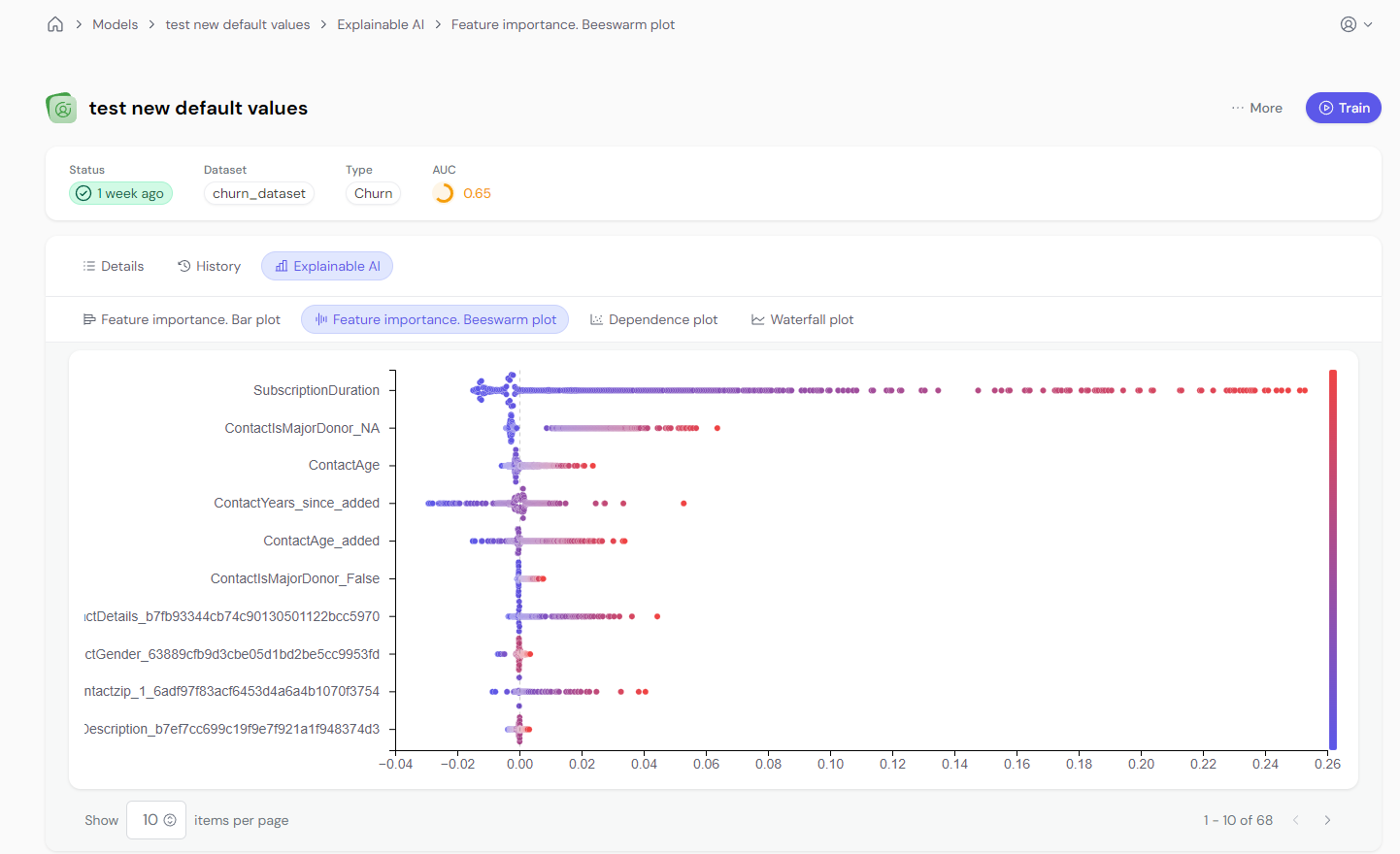

The second part of this page is dedicated to model explainability using SHAP (SHapley Additive exPlanations) values. SHAP values show how the model arrives at a prediction by attributing it to the contribution of each feature.

Tabs Structure

Use tabs to organize different types of SHAP plots:

- Feature Importance

- Beeswarm Plot

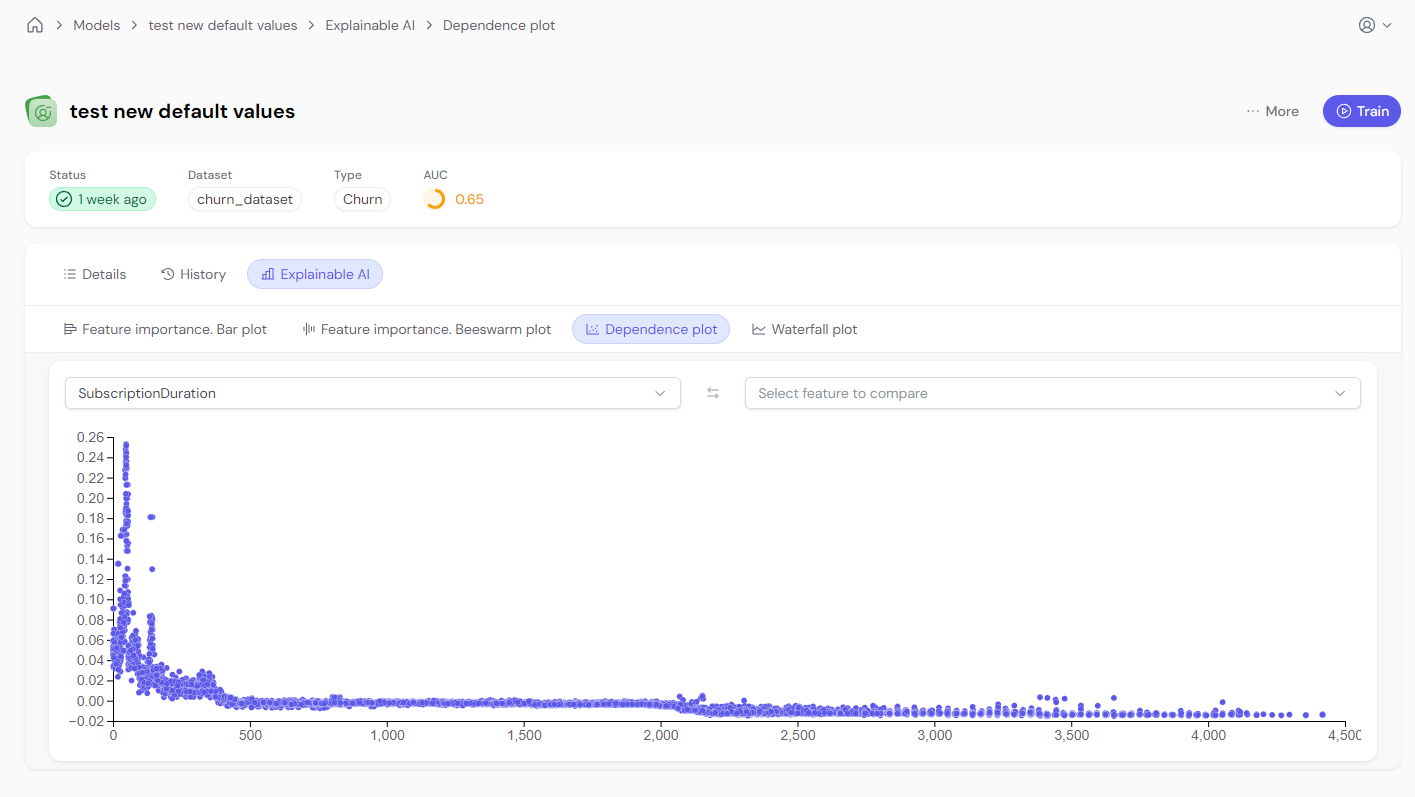

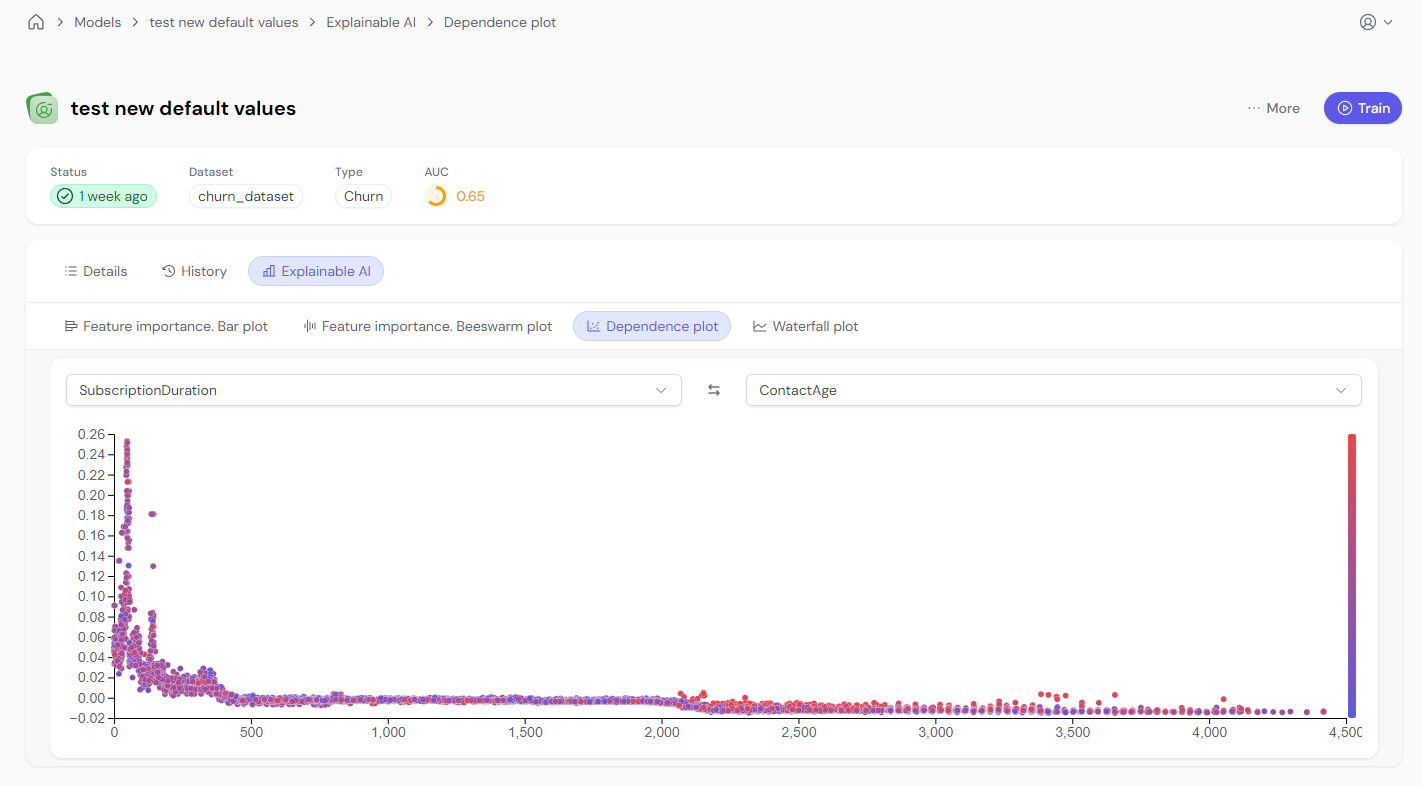

- Dependence Plot

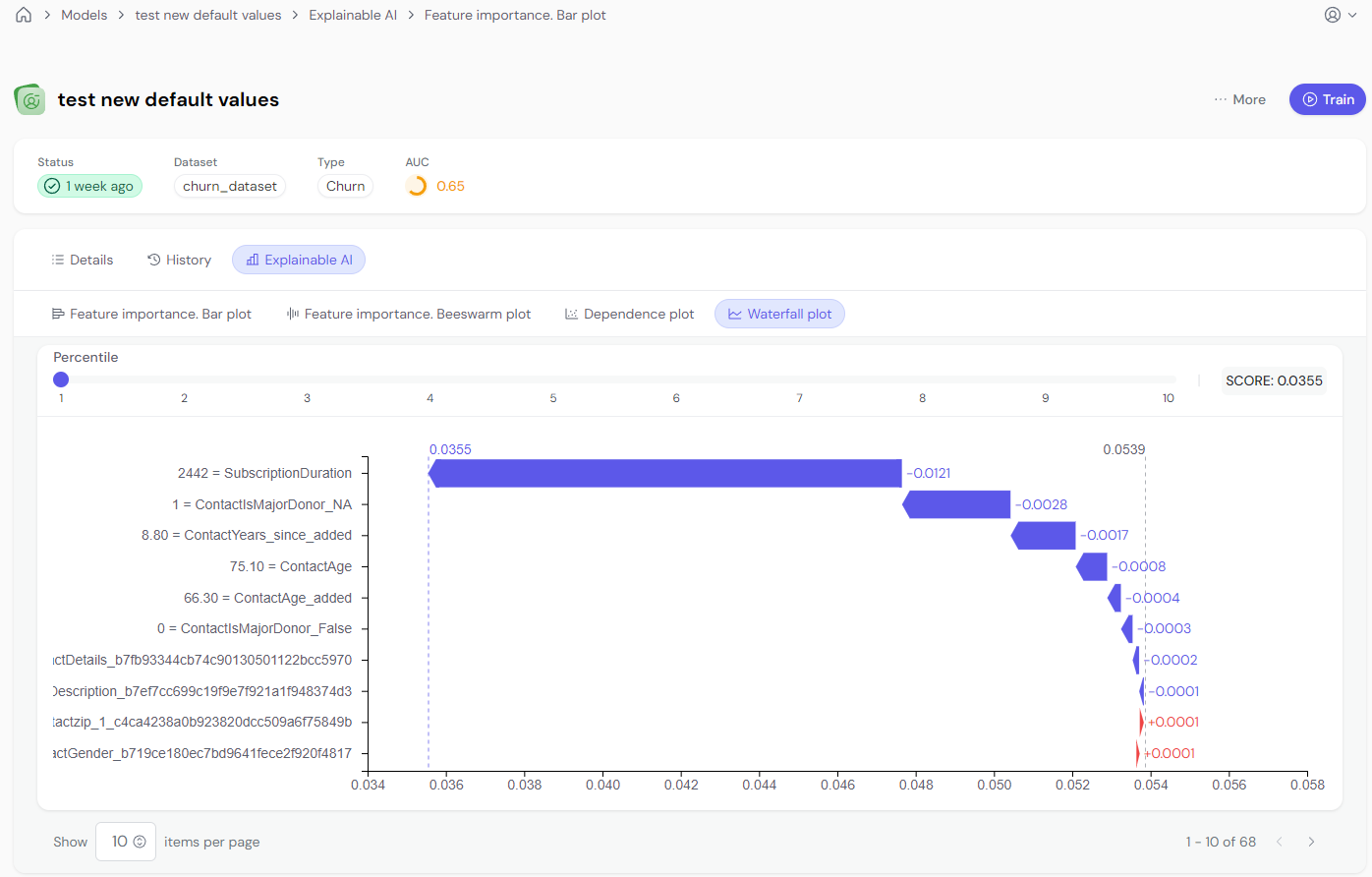

- Waterfall Plot

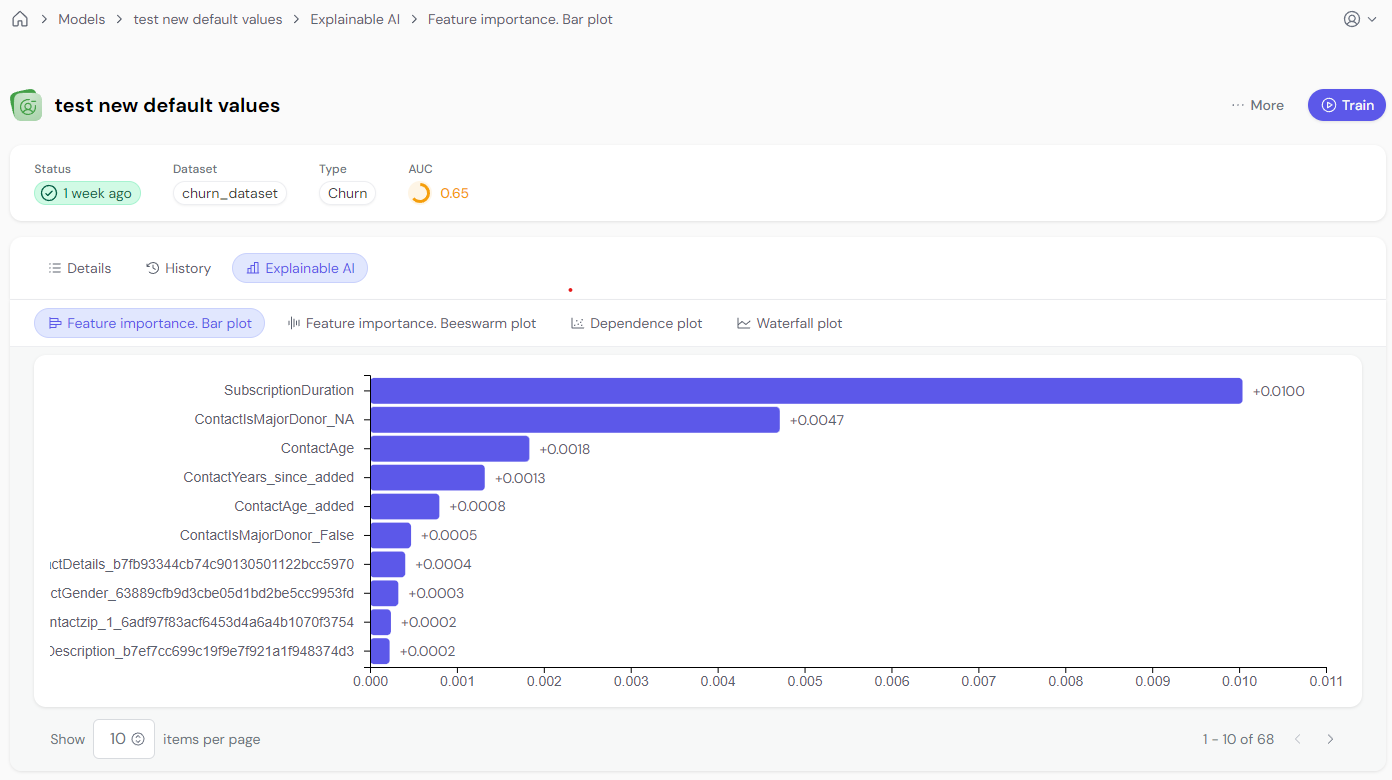
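To make the attribution idea behind these plots concrete, the following is a minimal sketch that computes exact Shapley values for a single prediction, the kind of per-prediction breakdown a waterfall plot draws. Everything in it is an assumption for illustration: `toy_churn_score` is a hand-written scoring function rather than a trained model, the feature names are hypothetical, and "absent" features are replaced by one reference customer instead of the background dataset a real SHAP computation would typically average over.

```python
from itertools import combinations
from math import factorial


def toy_churn_score(f):
    """Hypothetical hand-written score, standing in for a trained model."""
    return (0.10
            + 0.02 * f["days_inactive"]
            + 0.05 * f["complaints"]
            - 0.01 * f["tenure_years"] * f["complaints"])


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every subset of the other features."""
    names = list(instance)
    n = len(names)

    def value(subset):
        # Features in `subset` take the customer's values, the rest the baseline's.
        mixed = {k: (instance[k] if k in subset else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                chosen = set(subset)
                total += weight * (value(chosen | {name}) - value(chosen))
        phi[name] = total
    return phi


# Illustrative values only; not taken from any real dataset.
baseline = {"days_inactive": 5, "complaints": 0, "tenure_years": 3}
customer = {"days_inactive": 20, "complaints": 2, "tenure_years": 1}

phi = shapley_values(toy_churn_score, customer, baseline)
base_value = toy_churn_score(baseline)
prediction = toy_churn_score(customer)

print(f"base value: {base_value:.3f}")
for name, contribution in phi.items():
    print(f"  {name:>14}: {contribution:+.3f}")
print(f"prediction: {prediction:.3f}")
```

The contributions add up exactly: the base value plus the sum of all Shapley values equals the prediction for that customer. Exhaustive enumeration is only practical for a handful of features; it is used here because it keeps the definition visible.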

Feature Importance Bar Plot

A bar plot displays the global importance of each feature, indicating which features the model relied on most to make predictions and helping users identify the most influential factors. The length of each bar reflects importance only: a larger bar means the feature mattered more to the model, not necessarily that its SHAP values are higher.
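As a rough illustration of why a long bar is not the same as a high SHAP value, the sketch below ranks features by their mean absolute SHAP value across several predictions. This is a common convention for global importance, but the page does not state which formula its bar plot uses, so treat the formula as an assumption. The feature names and SHAP numbers are invented for the example.

```python
# Hypothetical per-prediction SHAP values (one dict per customer).
shap_rows = [
    {"days_inactive": 0.30, "complaints": 0.10, "tenure_years": -0.02},
    {"days_inactive": -0.25, "complaints": 0.12, "tenure_years": 0.01},
    {"days_inactive": 0.20, "complaints": 0.08, "tenure_years": -0.03},
    {"days_inactive": -0.35, "complaints": 0.10, "tenure_years": 0.02},
]

features = list(shap_rows[0])

# Global importance (assumed convention): average magnitude, ignoring sign.
importance = {
    name: sum(abs(row[name]) for row in shap_rows) / len(shap_rows)
    for name in features
}

# Signed average, shown only for contrast with the magnitude-based importance.
signed_mean = {
    name: sum(row[name] for row in shap_rows) / len(shap_rows)
    for name in features
}

ranking = sorted(features, key=importance.get, reverse=True)

for name in ranking:
    bar = "#" * round(importance[name] * 100)
    print(f"{name:>14} | {bar} {importance[name]:.3f} (signed mean {signed_mean[name]:+.3f})")
```

In this made-up data, `days_inactive` gets the longest bar even though its signed average is close to zero, because it pushes some predictions up and others down. The bar captures how much a feature moves predictions, not in which direction.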

Performance Metrics

The first section provides key performance metrics for each model after training. The most important metrics include:

- AUC-ROC (Area Under the Receiver Operating Characteristic Curve): Measures the model’s ability to distinguish between classes. A higher value indicates better performance.

- AUC-PR (Area Under the Precision-Recall Curve): Summarizes the trade-off between precision (positive predictive value) and recall (true positive rate), which makes it especially useful for imbalanced datasets. A higher value indicates better performance.

AUC-ROC

Area Under the Receiver Operating Characteristic Curve. Indicates how well the model distinguishes between classes.

AUC-PR

Area Under the Precision-Recall Curve. Measures performance with imbalanced data.
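
For readers who want to see how these two numbers can be obtained from predictions, here is a minimal sketch. It assumes AUC-ROC is computed through its pairwise-ranking interpretation (the chance that a randomly chosen positive is scored above a randomly chosen negative) and approximates AUC-PR with average precision. The page does not say which estimators the product uses, and the labels and scores are invented.

```python
def auc_roc(labels, scores):
    """Fraction of (positive, negative) pairs where the positive scores higher."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


def average_precision(labels, scores):
    """Mean of precision@k taken at the rank k of each positive example."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits


# Illustrative data: 1 = churned, 0 = stayed; scores are predicted probabilities.
labels = [1, 0, 1, 0, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]

roc = auc_roc(labels, scores)
ap = average_precision(labels, scores)
print(f"AUC-ROC: {roc:.3f}")
print(f"AUC-PR (average precision): {ap:.3f}")
```

An AUC-ROC of 0.5 corresponds to random ranking, while the baseline for AUC-PR is the share of positives in the data, which is why the two metrics can tell different stories on imbalanced datasets.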